Text Analysis

May 19, 2025

New package: tidytext

Survey data file

Short Open-Ended Question

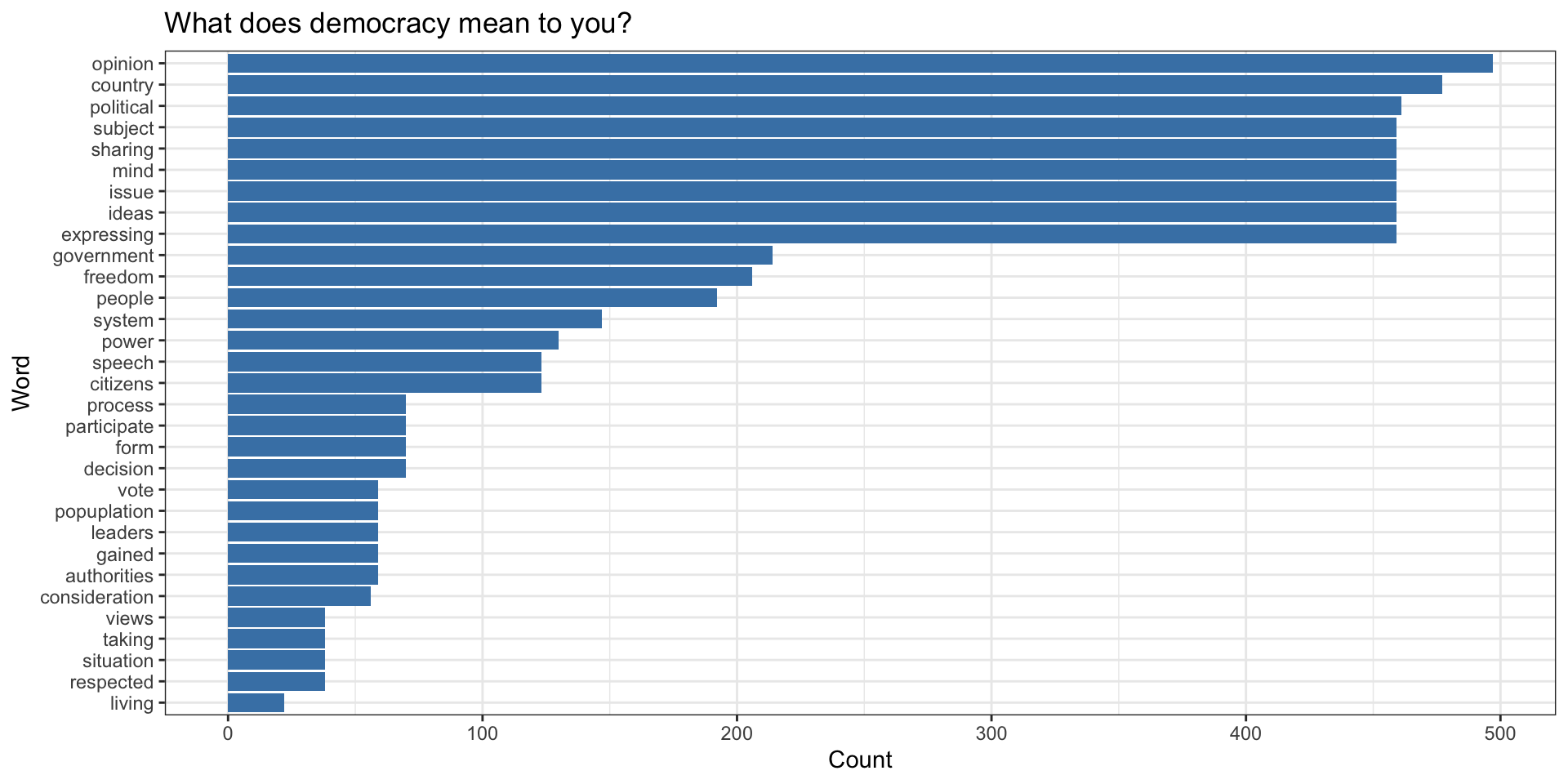

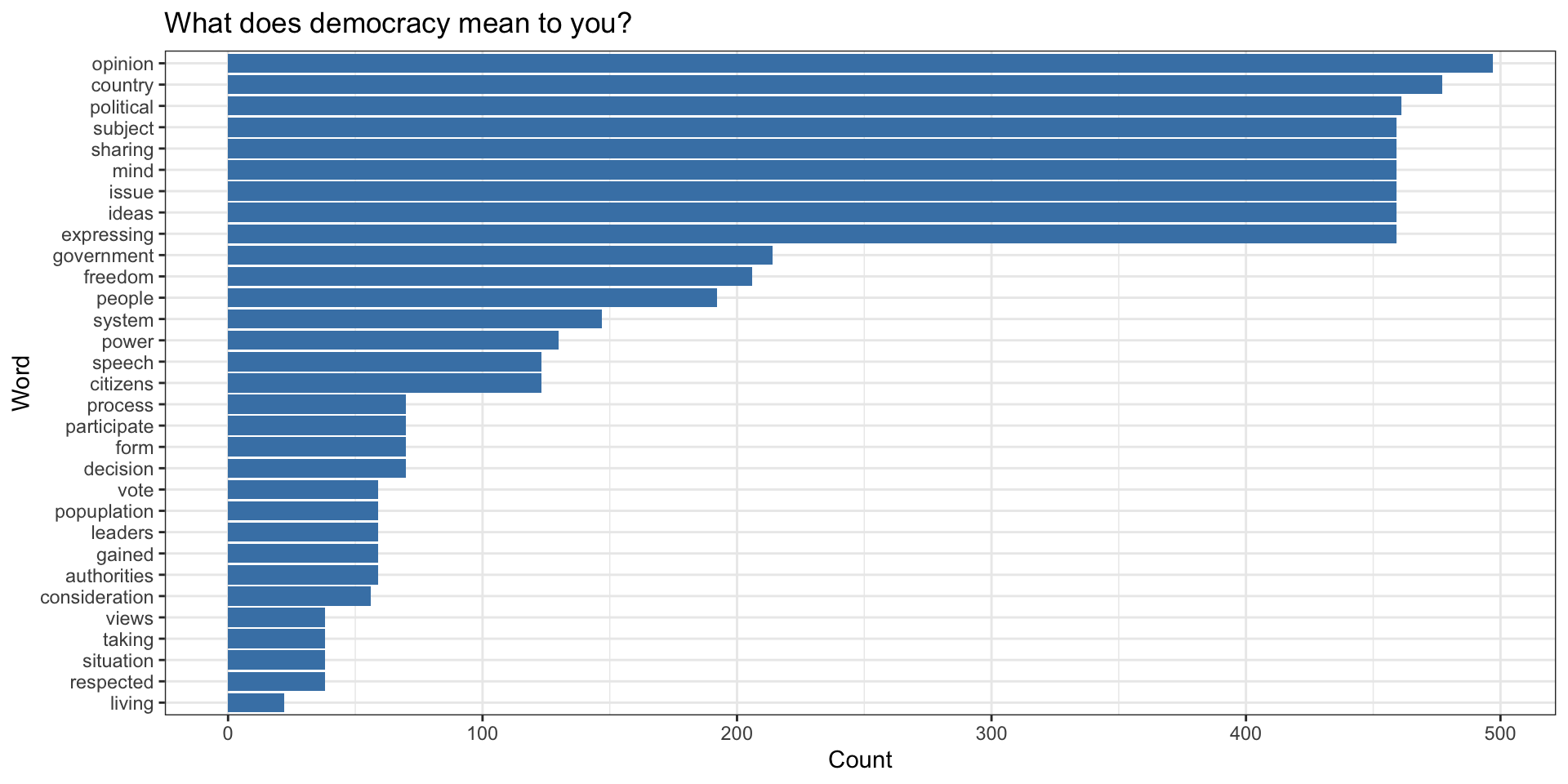

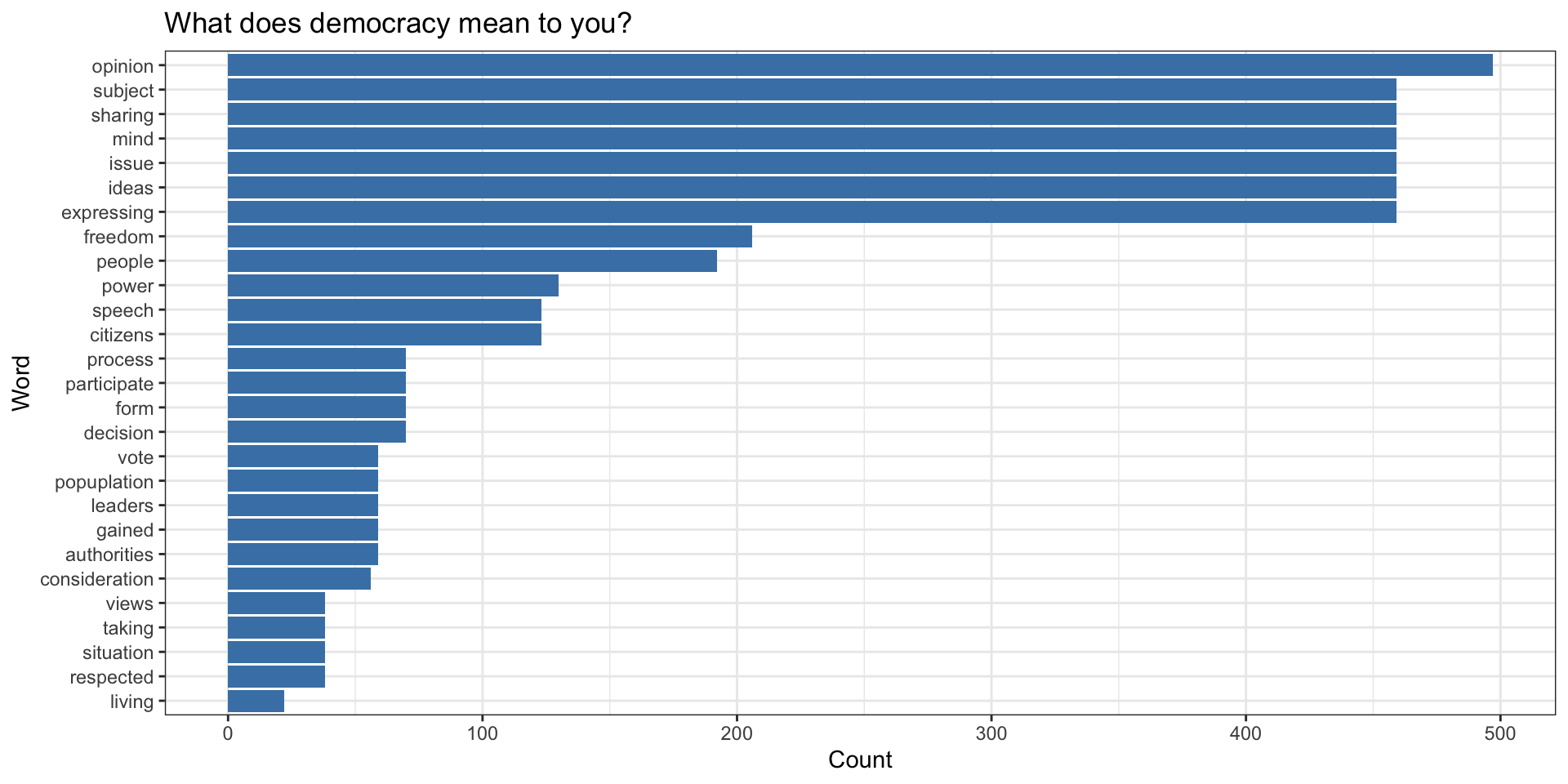

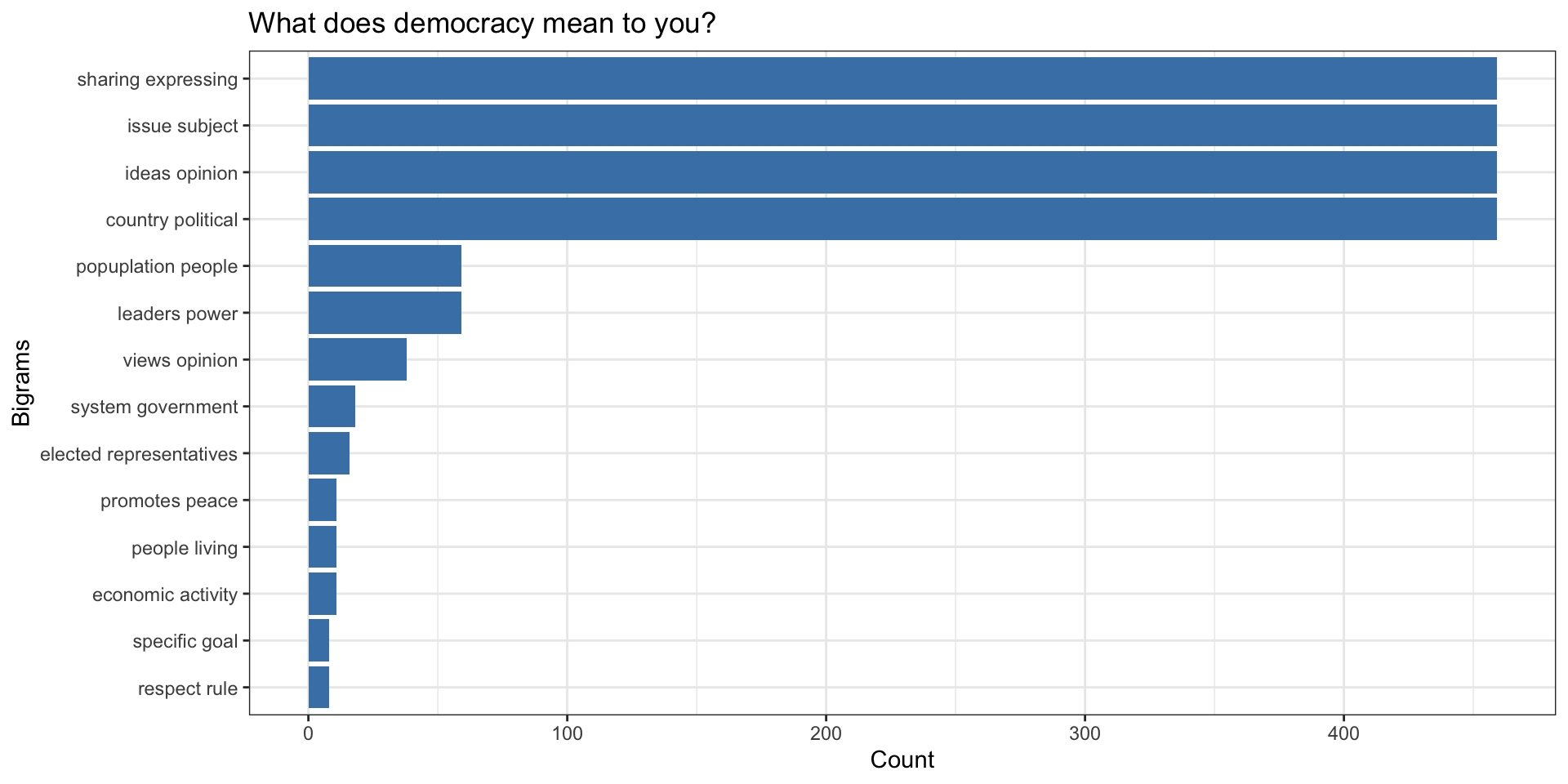

What does democracy mean to you? (V_Q6_1): how do people define or conceptualize democracy?

Workflow

- Read the responses!
- Tokenize: “un-nest” the text responses so that each row of the data is a word (or a pair or triplet of words, i.e., “n-grams”)
- Remove “stop words” such as “the”, “a”, and “of”
- Analyze and visualize the tokens
- Code responses into groups if helpful and needed

Tokenization

A token is a meaningful unit of text, most often a word, that we are interested in using for further analysis, and tokenization is the process of splitting text into tokens.

Unnest data into tokens
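A minimal sketch of this step, assuming the responses sit in a data frame with a `Respondent_Serial` ID and the `V_Q6_1` text column; the two-row `responses` tibble is a hypothetical stand-in for the survey file. By default, tidytext's `unnest_tokens()` lowercases the text and strips punctuation, and any other columns (such as `rural` or `ed_binary`) are copied onto every token row.

```r
library(dplyr)
library(tidytext)

# Hypothetical stand-in for the survey file (real data have more columns)
responses <- tibble(
  Respondent_Serial = c(1, 2),
  V_Q6_1 = c("It's a system of government by the people",
             "Sharing and expressing ideas")
)

# unnest_tokens(output, input): one row per word; the input column is dropped
unNest <- responses %>%
  unnest_tokens(word, V_Q6_1)

unNest
```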

Check out results

```
# A tibble: 10 × 4
   Respondent_Serial word        rural ed_binary
               <dbl> <chr>       <dbl> <chr>
 1            720044 it's            0 Secondary or higher
 2            720044 a               0 Secondary or higher
 3            720044 system          0 Secondary or higher
 4            720044 of              0 Secondary or higher
 5            720044 government      0 Secondary or higher
 6            720044 by              0 Secondary or higher
 7            720044 the             0 Secondary or higher
 8            720044 popuplation     0 Secondary or higher
 9            720044 people          0 Secondary or higher
10            719271 sharing         0 Secondary or higher
```

N-grams
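Bigrams can come from the same function by switching the tokenizer to `token = "ngrams"` with `n = 2`, which yields overlapping two-word sequences within each response. A sketch on the same hypothetical two-response stand-in; the output column is named `word` only to mirror the results below.

```r
library(dplyr)
library(tidytext)

# Hypothetical stand-in for the survey responses
responses <- tibble(
  Respondent_Serial = c(1, 2),
  V_Q6_1 = c("It's a system of government by the people",
             "Sharing and expressing ideas")
)

# Overlapping two-word sequences; pairs never span two responses
unNest_2 <- responses %>%
  unnest_tokens(word, V_Q6_1, token = "ngrams", n = 2)

unNest_2
```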

Check out results

```
# A tibble: 10 × 3
   Respondent_Serial word                rural
               <dbl> <chr>               <dbl>
 1            720044 it's a                  0
 2            720044 a system                0
 3            720044 system of               0
 4            720044 of government           0
 5            720044 government by           0
 6            720044 by the                  0
 7            720044 the popuplation         0
 8            720044 popuplation people      0
 9            719271 sharing expressing      0
10            719271 expressing of           0
```

- You can also un-nest into sentences if you have longer text.
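A sketch of sentence-level tokenization on a hypothetical longer answer, using tidytext's `token = "sentences"` option:

```r
library(dplyr)
library(tidytext)

# Hypothetical longer response containing two sentences
long_text <- tibble(
  id = 1,
  text = "Democracy means free elections. It also means free speech."
)

# One row per sentence instead of one row per word
sentences <- long_text %>%
  unnest_tokens(sentence, text, token = "sentences")

sentences
```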

Remove “stop words”
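One common way to do this is an anti-join against tidytext's built-in `stop_words` table. A sketch on a few hypothetical tokens; whether the original analysis used `anti_join()` or a `filter()` like the one in the n-gram code is an assumption.

```r
library(dplyr)
library(tidytext)

# Hypothetical tokens from one response
unNest <- tibble(
  Respondent_Serial = c(1, 1, 1, 1),
  word = c("a", "system", "of", "government")
)

# stop_words has a `word` column; anti_join keeps rows with NO match in it
unNest_clean <- unNest %>%
  anti_join(stop_words, by = "word")

unNest_clean
```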

Check out results

```
# A tibble: 10 × 4
   Respondent_Serial word        rural ed_binary
               <dbl> <chr>       <dbl> <chr>
 1            720044 system          0 Secondary or higher
 2            720044 government      0 Secondary or higher
 3            720044 popuplation     0 Secondary or higher
 4            720044 people          0 Secondary or higher
 5            719271 sharing         0 Secondary or higher
 6            719271 expressing      0 Secondary or higher
 7            719271 ideas           0 Secondary or higher
 8            719271 opinion         0 Secondary or higher
 9            719271 mind            0 Secondary or higher
10            719271 issue           0 Secondary or higher
```

Remove Stop Words from N-Grams

```r
library(tidyr)  # separate() and unite()

# Split each bigram into its two words
bigrams_separated <- unNest_2 %>%
  separate(word, c("word1", "word2"), sep = " ")

# Drop any bigram in which either word is a stop word
bigrams_filtered <- bigrams_separated %>%
  filter(!word1 %in% stop_words$word) %>%
  filter(!word2 %in% stop_words$word)

# Paste the remaining pairs back together
bigrams_united <- bigrams_filtered %>%
  unite(bigram, word1, word2, sep = " ")

head(bigrams_united)
```

```
# A tibble: 6 × 3
  Respondent_Serial bigram             rural
              <dbl> <chr>              <dbl>
1            720044 popuplation people     0
2            719271 sharing expressing     0
3            719271 ideas opinion          0
4            719271 issue subject          0
5            719271 country political      0
6            720153 sharing expressing     0
```

Top Words

Code to produce graph

Some words are not informative for this question:

- Let’s remove “government”, “political”, “system”, and “country”
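The counting, filtering, and plotting steps might look like this sketch. It uses a handful of hypothetical tokens, mirrors the `ggplot2` bar-chart style used later for the Office example, and `drop_words` is an illustrative name.

```r
library(dplyr)
library(ggplot2)

# Hypothetical tokens after stop-word removal (NA mimics an empty response)
unNest <- tibble(word = c("people", "government", "freedom", "people",
                          "system", "freedom", "people", NA))

# Words judged uninformative for this question
drop_words <- c("government", "political", "system", "country")

top_words <- unNest %>%
  filter(!is.na(word), !word %in% drop_words) %>%
  count(word, sort = TRUE) %>%
  mutate(word = reorder(word, n))   # order bars by frequency

p <- ggplot(top_words, aes(n, word)) +
  geom_col(fill = "steelblue") +
  theme_bw() +
  labs(x = "Count", y = NULL, title = "Most frequent words")

p
```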

Top Bigrams

Word clouds

Code

```r
library(wordcloud)
library(RColorBrewer)  # brewer.pal()

# Drop missing tokens and the uninformative words, then count
d <- unNest %>%
  filter(!is.na(word)) %>%
  filter(!word %in% c("government", "system", "political", "country")) %>%
  count(word, sort = TRUE) %>%
  ungroup()

# random.order = FALSE places the most frequent words in the center
wordcloud(d$word, d$n, random.order = FALSE, max.words = 50,
          colors = brewer.pal(8, "Dark2"))
```

Co-Occurrence in the same response

N-grams capture words that appear next to one another.

We can also capture words that co-occur in the same response but are not adjacent.

```r
library(widyr)

# Count the responses in which each pair of words co-occurs
# (by default each pair is listed in both orders)
word_pairs <- unNest %>%
  pairwise_count(word, Respondent_Serial, sort = TRUE)

# Combine the two item columns into one label for display
words_united <- word_pairs %>%
  unite(pairs, item1, item2, sep = " ")

words_united
```

```
# A tibble: 402 × 2
   pairs                   n
   <chr>               <dbl>
 1 expressing sharing    459
 2 ideas sharing         459
 3 opinion sharing       459
 4 mind sharing          459
 5 issue sharing         459
 6 subject sharing       459
 7 country sharing       459
 8 political sharing     459
 9 sharing expressing    459
10 ideas expressing      459
# ℹ 392 more rows
```

Quick break

Sentiment Analysis

tidytext includes dictionaries for evaluating the opinion or emotion in text. Three general-purpose lexicons are:

- AFINN from Finn Årup Nielsen,

- bing from Bing Liu and collaborators, and

- nrc from Saif Mohammad and Peter Turney.
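A quick way to inspect a lexicon's structure. Of the three, only bing ships with tidytext itself; `get_sentiments("afinn")` and `get_sentiments("nrc")` download their data through the textdata package and ask you to accept a license the first time, so this sketch loads bing only. For comparison, AFINN gives each word an integer score from -5 to 5, and nrc assigns words to emotion categories (such as anger or joy) in addition to positive and negative.

```r
library(dplyr)
library(tidytext)

# bing: one row per word, sentiment is "positive" or "negative"
bing <- get_sentiments("bing")

# How many words carry each label
bing %>% count(sentiment)
```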


Connect lexicon to Ghana data

myB <- get_sentiments("bing")

ghanaB <- unNest %>%

inner_join(myB) # will merge on word

head(ghanaB)# A tibble: 6 × 5

Respondent_Serial word rural ed_binary sentiment

<dbl> <chr> <dbl> <chr> <chr>

1 719271 issue 0 Secondary or higher negative

2 720153 issue 0 Secondary or higher negative

3 720750 issue 0 Primary or lower negative

4 720852 issue 0 Primary or lower negative

5 722984 issue 1 Secondary or higher negative

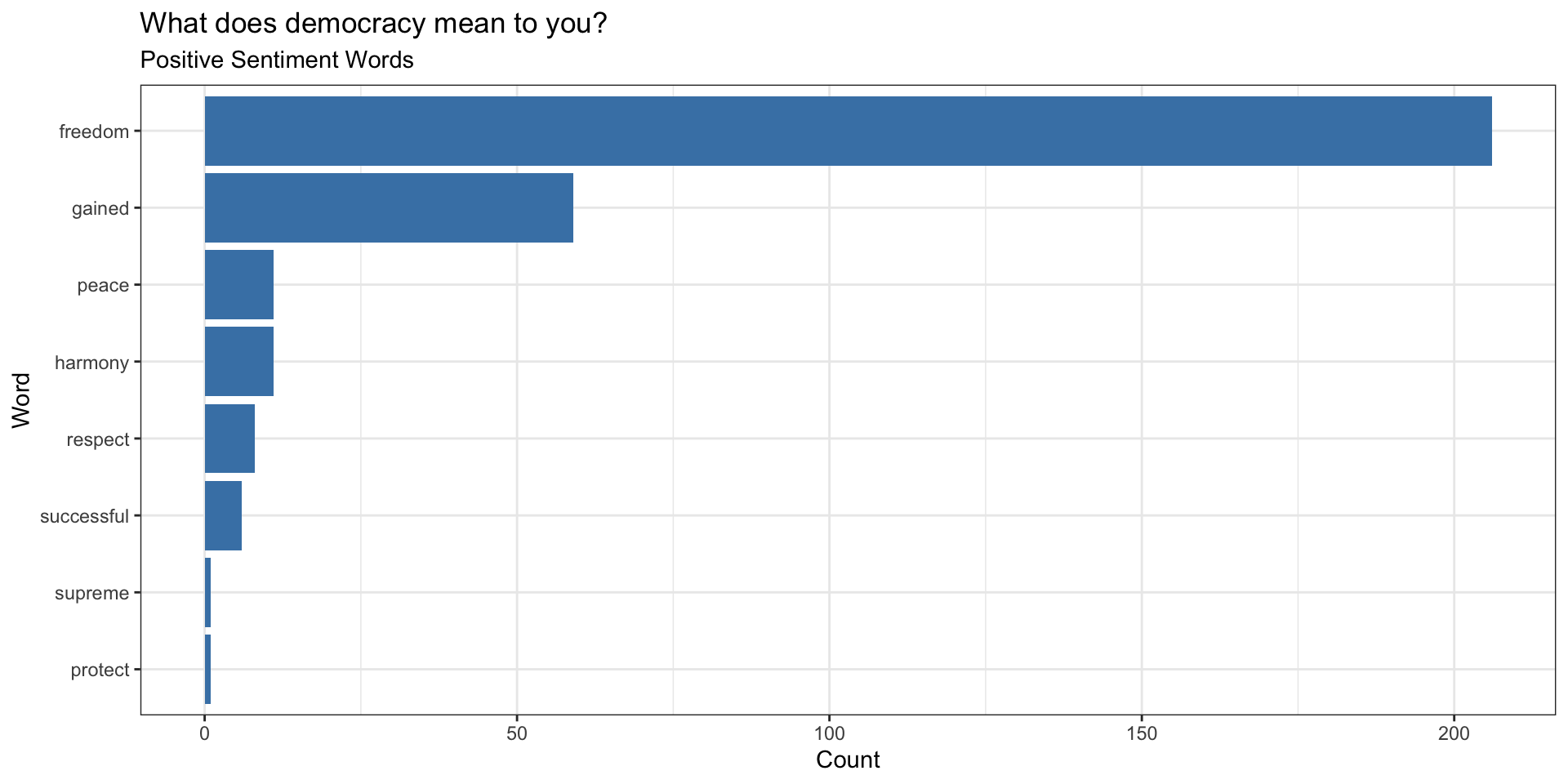

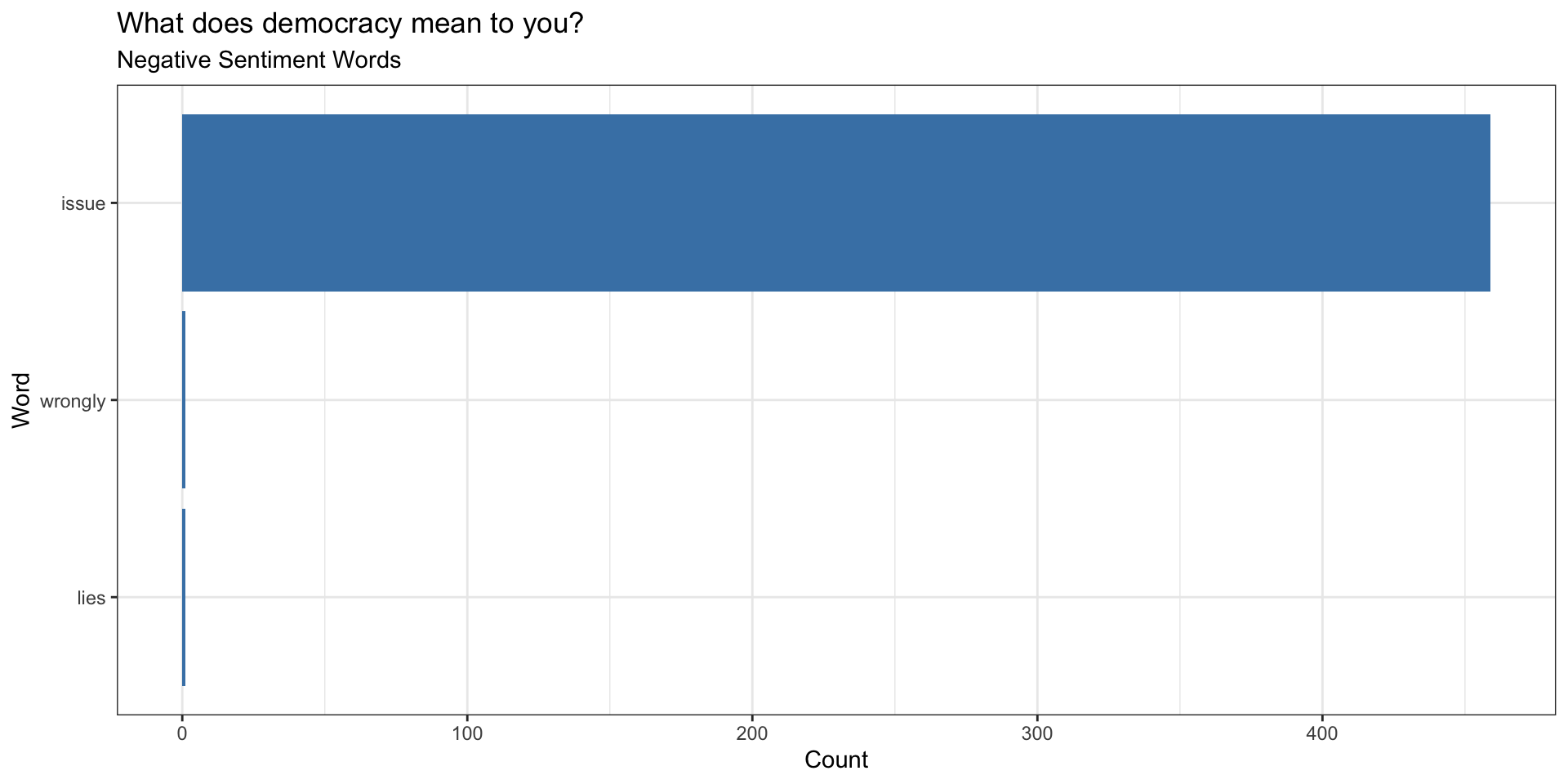

6 722988 issue 1 Primary or lower negative Common Words with Positive Sentiments

Common Words with Negative Sentiments

New Example

The Office data


```
Rows: 55,130
Columns: 12
$ index            <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16…
$ season           <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…
$ episode          <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…
$ episode_name     <chr> "Pilot", "Pilot", "Pilot", "Pilot", "Pilot", "Pilot",…
$ director         <chr> "Ken Kwapis", "Ken Kwapis", "Ken Kwapis", "Ken Kwapis…
$ writer           <chr> "Ricky Gervais;Stephen Merchant;Greg Daniels", "Ricky…
$ character        <chr> "Michael", "Jim", "Michael", "Jim", "Michael", "Micha…
$ text             <chr> "All right Jim. Your quarterlies look very good. How …
$ text_w_direction <chr> "All right Jim. Your quarterlies look very good. How …
$ imdb_rating      <dbl> 7.6, 7.6, 7.6, 7.6, 7.6, 7.6, 7.6, 7.6, 7.6, 7.6, 7.6…
$ total_votes      <int> 3706, 3706, 3706, 3706, 3706, 3706, 3706, 3706, 3706,…
$ air_date         <chr> "2005-03-24", "2005-03-24", "2005-03-24", "2005-03-24…
```

Filter by character

Tokenize

Remove Stop Words
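The filter, tokenize, and stop-word steps can be chained in one pipeline. The glimpse output above appears to match the `theoffice` dataset from the schrute package, but that is an assumption; this sketch uses a hypothetical three-line stand-in with the same `character` and `text` columns, and `michael_words` is an illustrative name.

```r
library(dplyr)
library(tidytext)

# Hypothetical stand-in for the Office script data
office <- tibble(
  character = c("Michael", "Jim", "Michael"),
  text = c("All right Jim. Your quarterlies look very good.",
           "Oh, I told you.",
           "That's what she said.")
)

michael_words <- office %>%
  filter(character == "Michael") %>%    # keep only Michael's lines
  unnest_tokens(word, text) %>%         # one word per row
  anti_join(stop_words, by = "word")    # drop common stop words

michael_words
```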

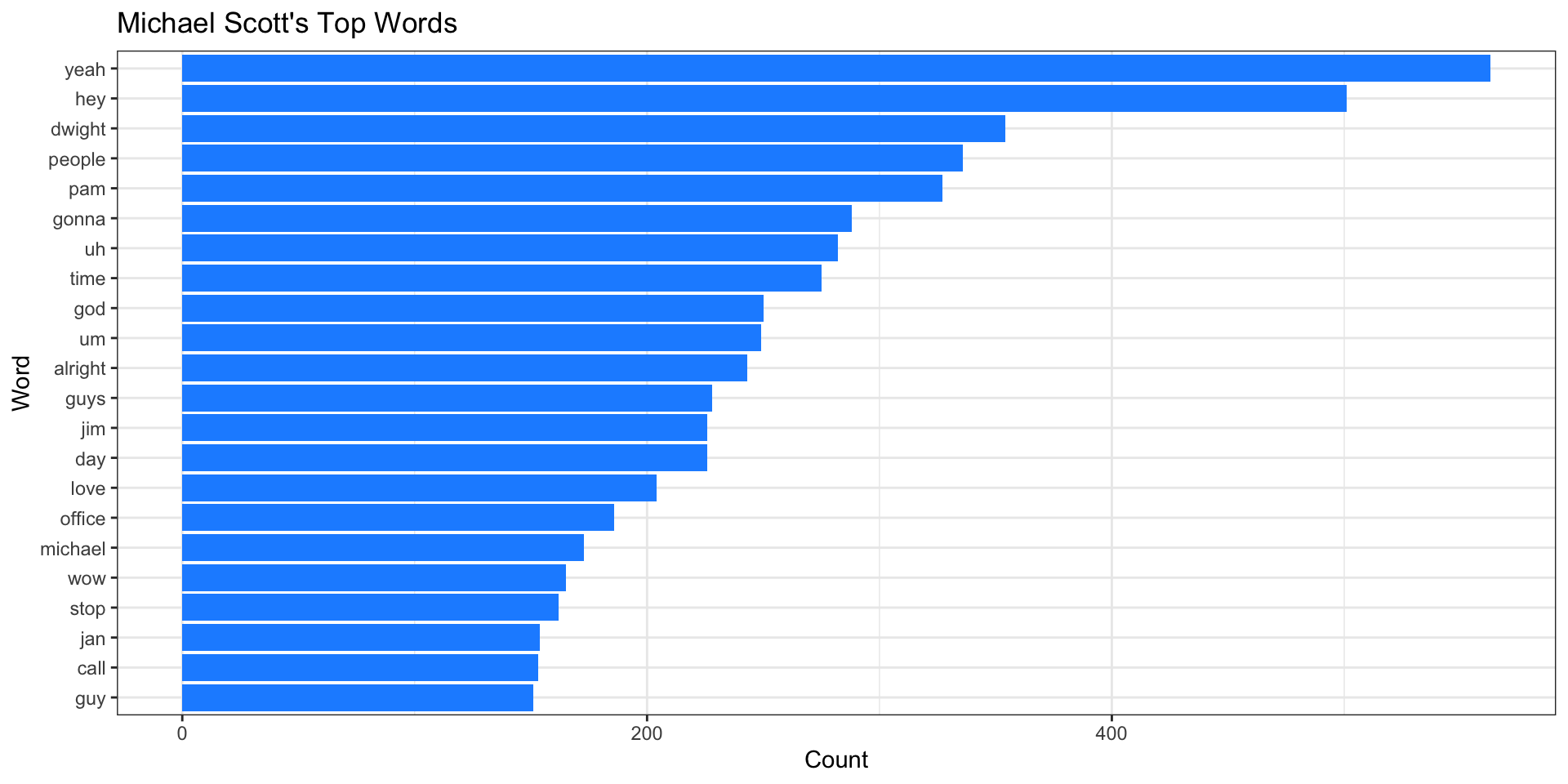

Top Words

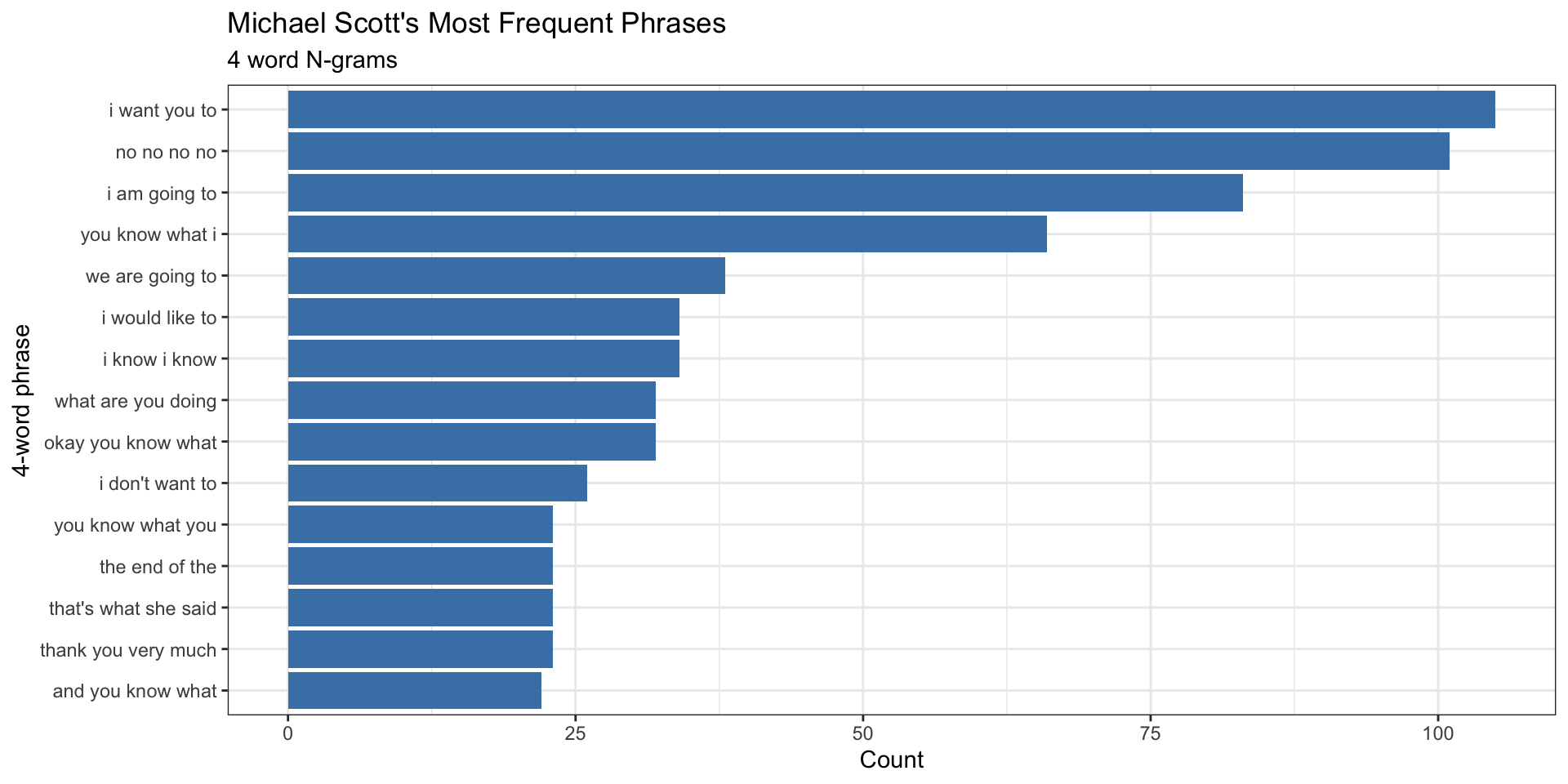

N-Grams (4 words)
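A sketch of the 4-gram step on hypothetical lines. A line with fewer than four words has no 4-gram, and `unnest_tokens()` returns `NA` for it, which is why the plotting code drops missing values before counting.

```r
library(dplyr)
library(tidytext)

# Hypothetical stand-in for the script data
office <- tibble(
  character = c("Michael", "Michael", "Jim"),
  text = c("That's what she said.",
           "No.",
           "Bears, beets, Battlestar Galactica.")
)

# Overlapping four-word phrases from Michael's lines only
unNest_2 <- office %>%
  filter(character == "Michael") %>%
  unnest_tokens(word, text, token = "ngrams", n = 4)

unNest_2
```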

N-Grams

Code

```r
# Michael's 4-word phrases said more than 20 times
unNest_2 %>%
  filter(!is.na(word)) %>%           # lines shorter than 4 words give NA
  count(word, sort = TRUE) %>%
  filter(n > 20) %>%
  mutate(word = reorder(word, n)) %>%
  ggplot(aes(n, word)) +
  geom_col(fill = "steelblue") +
  theme_bw() +
  labs(x = "Count",
       y = "4-word phrase",
       title = "Michael Scott's Most Frequent Phrases",
       subtitle = "4-word N-grams")
```

Sentiment Analysis
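`scottBing` is not defined on the slides. A plausible construction, assuming it is Michael's stop-word-filtered tokens joined to the bing lexicon, is sketched below; the `michael_words` input is hypothetical.

```r
library(dplyr)
library(tidytext)

# Hypothetical tokens from Michael's lines
michael_words <- tibble(word = c("quarterlies", "good", "great", "bad", "jim"))

# inner_join keeps only words in the bing lexicon and adds a sentiment column
scottBing <- michael_words %>%
  inner_join(get_sentiments("bing"), by = "word")

scottBing
```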

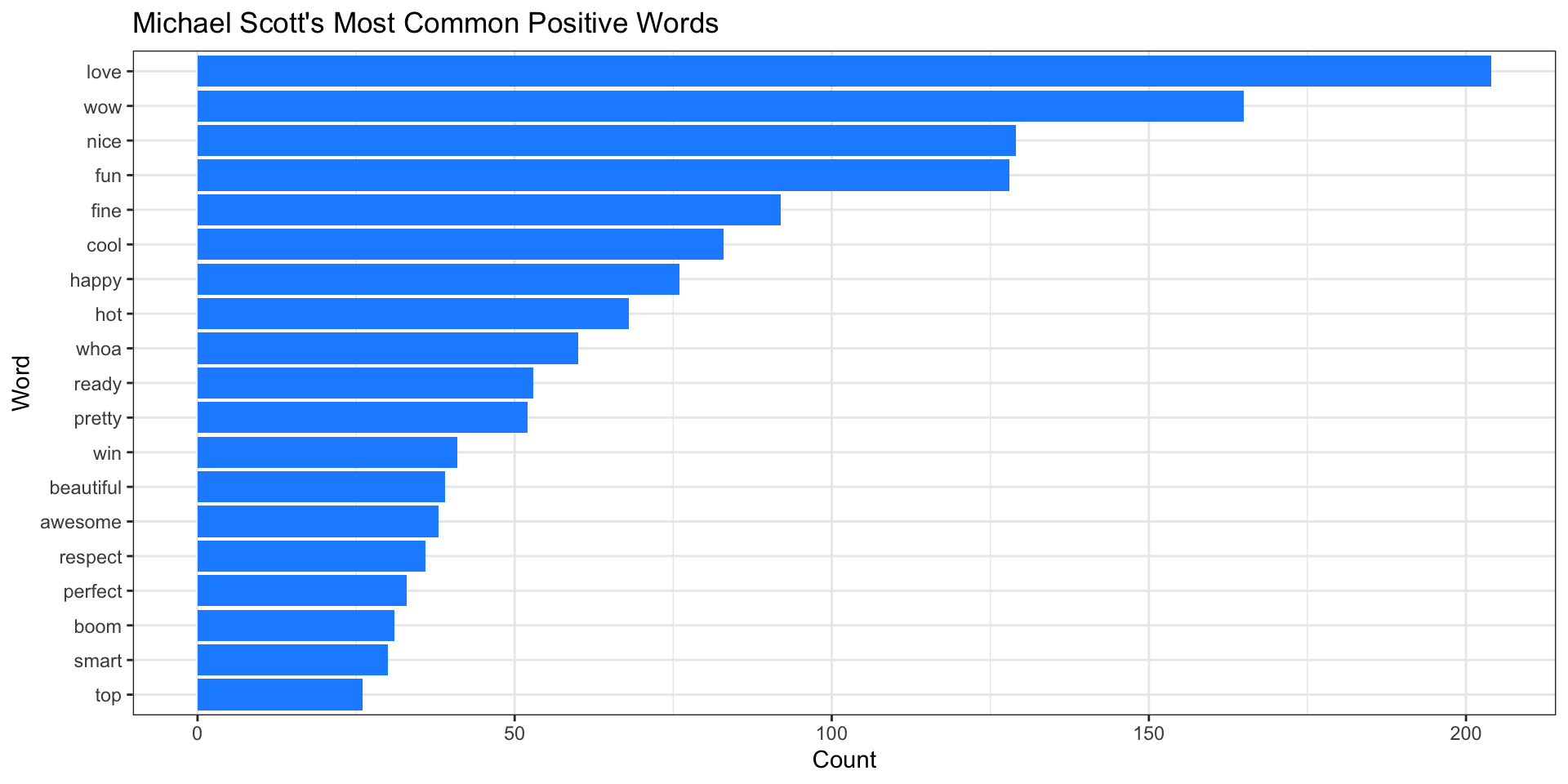

Most Common Positive Words

Code

```r
scottBing %>%
  filter(!is.na(word)) %>%
  filter(sentiment == "positive") %>%
  count(word, sort = TRUE) %>%
  filter(n > 25) %>%                 # words used more than 25 times
  mutate(word = reorder(word, n)) %>%
  ggplot(aes(n, word)) +
  geom_col(fill = "dodgerblue") +
  theme_bw() +
  labs(x = "Count",
       y = "Word",
       title = "Michael Scott's Most Common Positive Words")
```

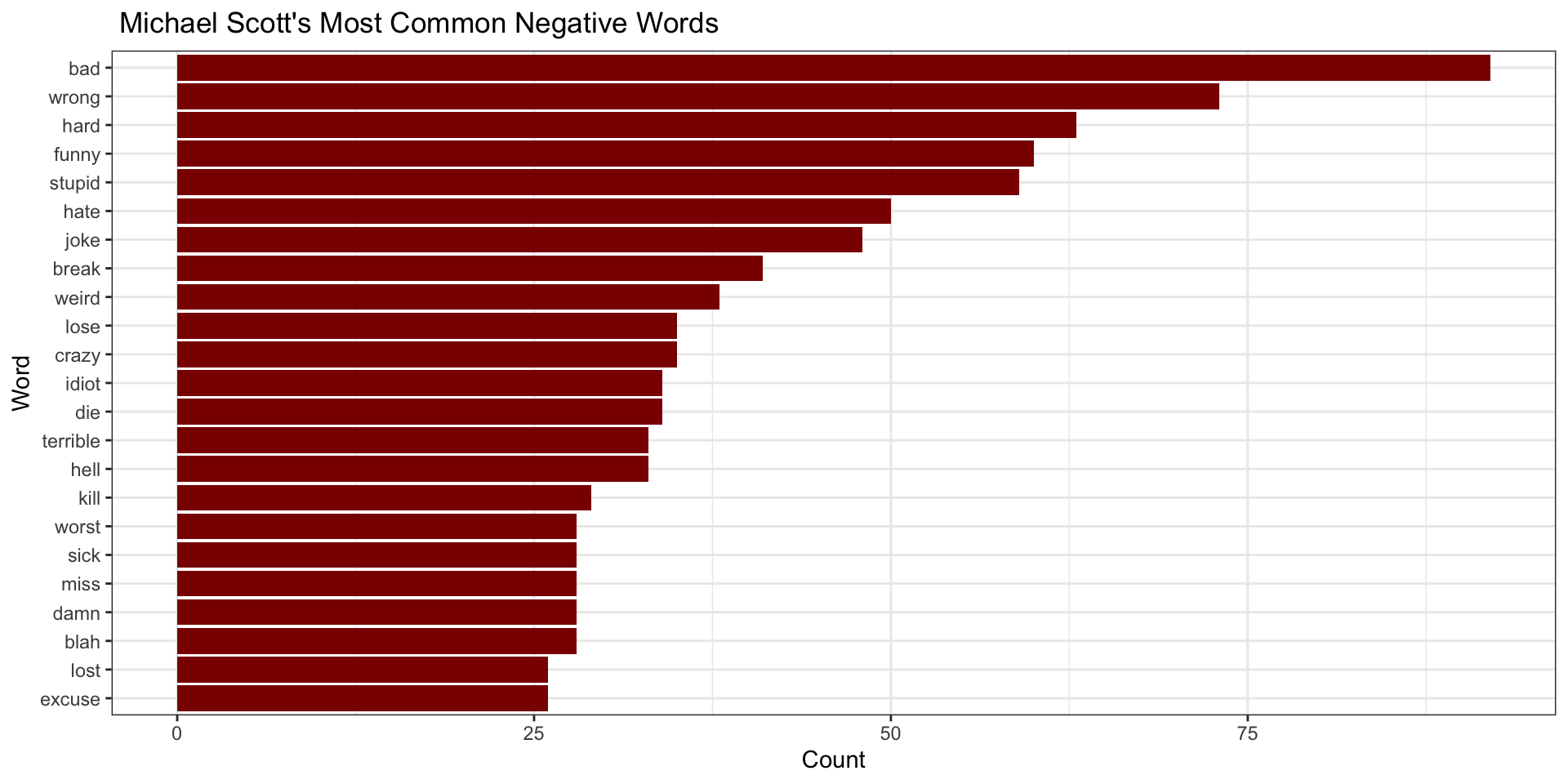

Most Common Negative Words

Code

```r
scottBing %>%
  filter(!is.na(word)) %>%
  filter(sentiment == "negative") %>%
  count(word, sort = TRUE) %>%
  filter(n > 25) %>%                 # words used more than 25 times
  mutate(word = reorder(word, n)) %>%
  ggplot(aes(n, word)) +
  geom_col(fill = "darkred") +
  theme_bw() +
  labs(x = "Count",
       y = "Word",
       title = "Michael Scott's Most Common Negative Words")
```

Posit Cloud
